AI once followed rules. Now it creates ideas.

Let’s dive into the exciting journey of Generative AI—exploring its evolution, one milestone at a time!

The Post-War Spark (1950s)

The Second World War had ended, but amidst the global efforts to rebuild, a different kind of spark ignited. A few brilliant minds began to gaze far beyond the horizon, not at bombs or battlefields, but at the very nature of thought itself.

In this era of curiosity, the conversation around machine intelligence officially began. In 1955, in a proposal for what became the 1956 Dartmouth Workshop, computer scientist John McCarthy coined the term “Artificial Intelligence,” giving a name to the daring new field.

But a few years earlier, British mathematician Alan Turing had already planted the seed. In his seminal 1950 paper, “Computing Machinery and Intelligence,” he posed a deceptively simple question: “Can machines think?” To answer it, he proposed “The Imitation Game,” a conversational test we now know as the Turing Test. This thought experiment challenged us to consider whether a machine could ever convince a person it was human, forever changing our relationship with technology and setting the stage for a revolution that would one day learn not just to think, but to create.

Did you know? More than 70 years after Turing proposed it, his test remains an open benchmark for human-like intelligence. Claims that a program has passed it surface from time to time, but none has been universally accepted.

From ELIZA to Siri

In the 1960s, long before AI could dream up poems or images, the world met its first chatbot: a program named ELIZA, from the labs at MIT. It mimicked a psychotherapist by rephrasing users’ statements into probing questions (“Tell me more about your mother”), and to the surprise of its own creator, people poured their hearts out to it. Some formed deep emotional bonds, believing it truly listened. Although it looked like a technical marvel, its creator, Joseph Weizenbaum, saw it as a parody, not a true intellect. Yet the episode revealed something profound about us: humans are eager to believe the machine cares.
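The rephrasing trick described above can be sketched in a few lines of Python. This is only an illustration of the general idea (match a pattern, swap pronouns, echo the statement back as a question). The rules, the `REFLECTIONS` table, and the function names are hypothetical and are not taken from Weizenbaum's original script.

```python
import re

# Hypothetical pronoun swaps so the echoed text addresses the user.
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are", "mine": "yours"}

# Hypothetical (pattern, response template) rules, checked in order.
RULES = [
    (re.compile(r"\bmy (mother|father|family)\b", re.IGNORECASE), "Tell me more about your {0}."),
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
]


def reflect(fragment: str) -> str:
    """Lowercase the fragment, drop trailing punctuation, and swap pronouns."""
    words = fragment.lower().rstrip(".!?").split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement: str) -> str:
    """Return the response for the first matching rule, or a generic prompt."""
    for pattern, template in RULES:
        match = pattern.search(statement)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."


if __name__ == "__main__":
    print(respond("My mother never listens to me."))
    print(respond("I feel ignored by my boss"))
```

Nothing here understands the user. The program only reshuffles the words it was given, which is why Weizenbaum could regard the result as a parody even as people confided in it.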

Decades later, this yearning for connection would surface again in pop culture — remember Raj from The Big Bang Theory? He fell for Siri, his iPhone assistant, treating her with the same affection he reserved for a real date. Beneath the laughter, that storyline underscored an enduring truth: our instinct to seek companionship in machines is as strong as our drive to build them.

AI Winters

The early optimism of AI’s first decades could not last forever. A bitter frost set in as the gap between soaring promises and disappointing reality grew too wide. While programs like ELIZA captivated the public, their clever but shallow tricks only highlighted how far machines were from true understanding. As funding evaporated, the field entered a series of deep freezes. The irony is that money turned out to be both the heat and the ice.

The first winter hit in the mid-1970s, after early hopes in machine translation and simple neural networks fizzled. The second, more severe frost followed in the late 1980s, fuelled by the collapse of the LISP machine market and other high-profile failures. During these periods, the term “AI” itself became toxic, and researchers often rebranded their work as “informatics” or “machine learning” just to stay afloat.

Yet even in the cold, embers glowed. In 1997, LSTM networks gave machines a kind of memory to better understand speech and writing, and that same year IBM’s Deep Blue defeated world chess champion Garry Kasparov, proving machines could compete — not just compute. Scientists also quietly tinkered with neural networks and n-gram models, keeping the dream alive with little more than persistence and hope. This underground progress, largely invisible to the outside world, laid hidden roots that would burst forth in 2006 with what is now called the deep learning renaissance. Think of the AI Winters like seasonal dormancy: on the surface, the branches looked bare, but underground, the roots kept growing — waiting patiently for spring.

Deep Learning Renaissance

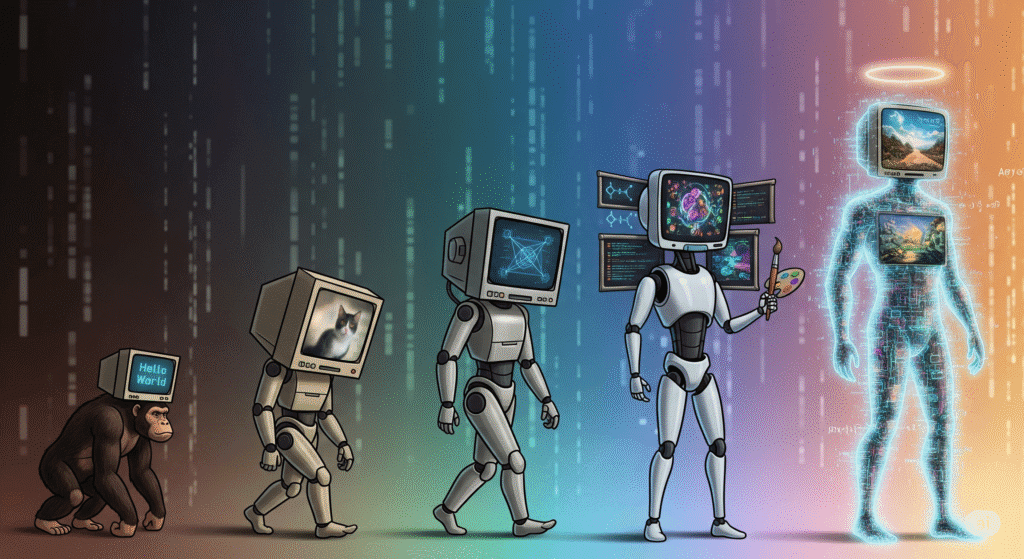

By the mid-2000s, the long winter finally began to thaw. In 2006, a small group of researchers reignited excitement with a breakthrough in training deep neural networks, and machines that once stumbled began to see and recognize. The real turning point came in 2012, when a deep learning model called AlexNet stunned the world by winning the ImageNet competition, a global challenge in computer vision, by a wide margin. Almost overnight, “AI” was no longer a dirty word; it was the future. This revival, often called the Deep Learning Renaissance, set the stage for a new era of creativity. Within just a few years, new models like VAEs and GANs would move beyond recognition to imagination, teaching machines not just to classify the world, but to create new worlds of their own.

The Great Acceleration

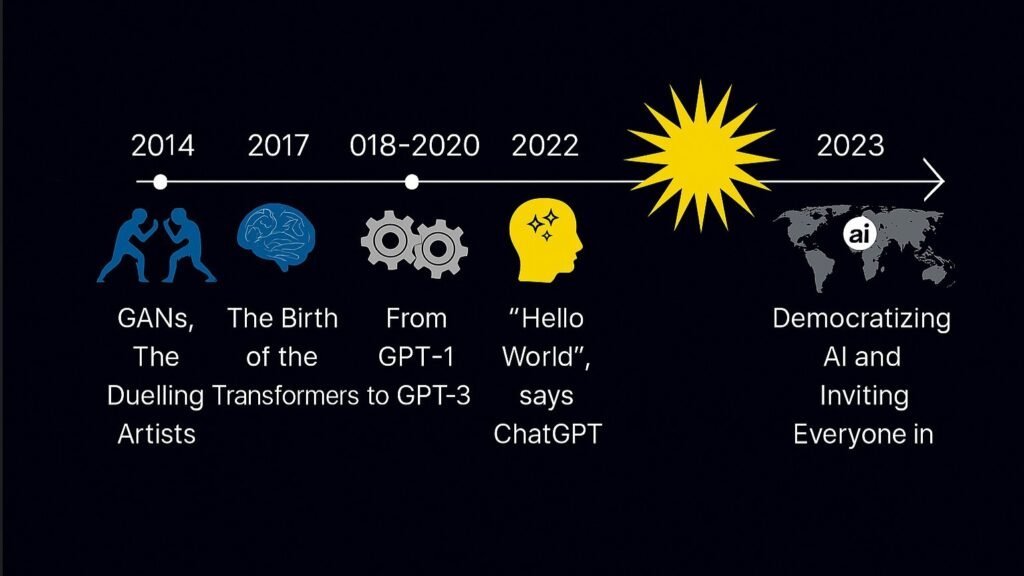

In the early 2010s, the world of artificial intelligence was still confined to labs and academic papers. But in 2014, a quiet spark lit a creative fuse. Ian Goodfellow and his colleagues introduced a concept so daring it felt almost magical: the Generative Adversarial Network, or GAN. Imagine two AIs locked in a fierce, creative duel: one a forger trying to create, the other a detective trying to expose the fake. This endless competition pushed them both to new heights until the AI could paint portraits of people who never existed and conjure landscapes no camera had ever seen. For the first time, machines weren’t just crunching data; they were dreaming.
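For readers who want to see the duel on paper, the forger-and-detective contest is usually written as a two-player minimax game. The notation below follows the common textbook form and is not defined elsewhere in this article: G is the generator (the forger), D is the discriminator (the detective), x is a real sample, and z is the random noise the generator starts from.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)} \big[ \log D(x) \big]
  + \mathbb{E}_{z \sim p_{z}(z)} \big[ \log \big( 1 - D(G(z)) \big) \big]
```

The detective D tries to make this value as large as possible by scoring real samples near 1 and forgeries near 0, while the forger G tries to make it as small as possible by producing samples that D scores as real.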

The next whisper came in 2017. A team of Google researchers published a paper with a humble title: “Attention Is All You Need.” Few outside the field noticed it then, but this paper introduced the transformer architecture, the foundational engine that would soon power a revolution. In 2018, OpenAI released the first of the great generative models: GPT-1. It was a research curiosity, a quiet proof of concept that showed what a transformer could do, but it never touched the public.

Then came the drama. In 2019, GPT-2 emerged, and suddenly the world paid attention. Its writing was startlingly fluent, spinning poems and stories that felt uncomfortably human. Its creator, OpenAI, worried about misuse and initially hesitated to release the full model, locking it away like forbidden fruit. That hesitation only deepened the intrigue and fuelled a global curiosity: if it was too powerful to share, what must it be capable of? Eventually, the full model was made public. Researchers and hobbyists could download it and run it from programming languages like Python, provided their computers were powerful enough. But the real revolution was yet to come.

The true unveiling arrived in 2020. GPT-3 was too big and too ground-breaking to give away, so it was offered as a paid API. This was a crucial turning point. GPT-3 wasn’t a product you could download; it was an engine you could plug into. It became a utility for developers and businesses, the engine that would power the apps of the future. The next year, GitHub, a Microsoft subsidiary, previewed one of the first and most prominent of these applications: Copilot, a coding assistant powered by Codex, an OpenAI model descended from GPT-3. It showed the world what a commercial product built on this technology could do.

But all of this was just a prelude to the main event. In late 2022, the world experienced a true cultural explosion with the launch of ChatGPT, powered by GPT-3.5. It was free, accessible, and instantly addictive. People rushed in, not because they needed it, but because they had to experience the wonder. It hit one million users in just five days, a speed of adoption faster than Instagram, Netflix, or Spotify. The long-imagined dream of talking to a machine, once a thought experiment, was now a daily conversation.

What’s Next?

Today, the revolution is not slowing down; it is accelerating at warp speed. GPT-5 launched in August 2025, pushing the boundaries of what these models can achieve. The rivalry is intense, with Google’s Gemini now competing directly with GPT. Microsoft is embedding Copilot into its Microsoft 365 and Power BI offerings, making AI a seamless part of professional life. Every company, from tech giants to small businesses, is now either creating new AI products or finding ways to use them to solve problems. This is no longer a quiet winter with bare branches. It is a full-blown revolution, and its roots are growing faster than ever.

We are living in an age where the term “AI” itself has become a source of fatigue for many, but the revolution is only just beginning. As the saying goes: “The technology of tomorrow is the road roller of today; if you don’t become part of the roller, you become part of the road.” So, let’s continue this journey together, shaping its direction and its path. I’ll see you on the road ahead.