What you see is not always what you get!

This post is not aimed at blaming any person, company, or analyst. Examples are used for educational purposes only.

It was a textbook moment—one that stuck with me.

I had just started my Data Visualization 101 module as part of my master’s program. And in the very first lecture, the professor said, “You can mislead with the visuals. So use your power for good, not bad.”

I remember thinking, “Wait… how can a chart mislead someone if the data is correct?”

The lecturer smiled, as if he knew exactly what was going through my mind, and the minds of the hundreds of other students enrolled in the course, and clicked to the next slide. Suddenly, I wasn’t so sure anymore.

Example 1: Exit Polls

So the first example? Super basic. Exit polls. You know, predicting who’s gonna win. The gap between the two parties? Just 0.2%. Barely anything.

But the chart? Whoa. The y-axis didn’t start at zero—it started halfway up. That tiny difference suddenly looked massive. Like one party was totally crushing the other.

And get this: the data itself wasn’t wrong. The numbers were legit. No lies, no hidden stuff. But the way it was shown? That’s where things get sketchy.

See, it’s all in the presentation. Colors, angles, axes, scale: tiny tweaks can completely flip how something feels. You look at that chart and think, “Wow, that’s a huge lead,” when it’s barely there.

That’s not just sloppy design. That’s misdirection.

And the worst part? Sometimes it’s 100% intentional.
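
To put a number on that exaggeration, here is a minimal sketch in Python. The vote shares (50.1% and 49.9%) and the axis baseline (49.8) are made-up values chosen only to reproduce a 0.2-point gap, and the function name `apparent_ratio` is hypothetical as well. It compares how tall the two bars are drawn against how large the values really are.

```python
def apparent_ratio(value_a: float, value_b: float, axis_min: float = 0.0) -> float:
    """Ratio of drawn bar heights when the y-axis starts at axis_min."""
    if min(value_a, value_b) <= axis_min:
        raise ValueError("both values must sit above the axis baseline")
    return (value_a - axis_min) / (value_b - axis_min)


# Hypothetical exit-poll shares, 0.2 points apart (not real poll data).
party_a, party_b = 50.1, 49.9

honest = apparent_ratio(party_a, party_b, axis_min=0.0)
truncated = apparent_ratio(party_a, party_b, axis_min=49.8)

print(f"Axis from 0:    bar A is {honest:.3f}x the height of bar B")
print(f"Axis from 49.8: bar A is {truncated:.1f}x the height of bar B")
```

With the baseline at zero the two bars are almost the same height. With the truncated baseline, one bar is drawn about three times as tall as the other, from exactly the same data.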

Example 2: Who's More Popular?

Can you guess who’s winning just by glancing? Of course you can—because that’s the point. The graphic invites you to skim, assume, feel. No need for messy things like math or context.

The moment your eyes hit the chart, Leader B looks like the champion. A strong upward tilt. A proud finish. The kind of visual that screams, “Victory!” But here’s the twist: Leader B starts at 3% and ends at 19%. It’s a significant jump, yes: a more than sixfold climb. A story of growth, persistence and resilience.

But the illusion goes beyond this data!

🧩 The axis—quietly manipulated. Instead of starting at zero, it starts just high enough to squash Leader A’s head start and stretch Leader B’s rise.

🔍 The font—subtly tweaked. Leader A, despite still holding a solid 37%, is demoted with smaller text.

🎨 The colours—delicately chosen. Leader B gleams in bold hues, radiating momentum. Leader A fades into the backdrop.

And most importantly: the chart suggests these two are neck-and-neck and following the same trend. Worse, it dares imply that Leader B has surpassed Leader A. But in reality? Leader A remains nearly twice as popular.
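
The arithmetic behind those figures takes a few lines to check. This sketch uses only the percentages quoted in this example (3%, 19%, and 37%); the variable names are my own.

```python
# Percentages quoted in the example.
leader_b_start, leader_b_end = 3.0, 19.0
leader_a_now = 37.0

fold_change_b = leader_b_end / leader_b_start   # relative growth of Leader B
point_gain_b = leader_b_end - leader_b_start    # absolute growth, in percentage points
a_to_b_ratio = leader_a_now / leader_b_end      # how far ahead Leader A still is

print(f"Leader B grew {fold_change_b:.1f}x (+{point_gain_b:.0f} points)")
print(f"Leader A is still {a_to_b_ratio:.2f}x as popular as Leader B")
```

The relative growth sounds dramatic because it starts from a small base (roughly 6.3x, but only 16 points). The last line, about 1.95x, is the comparison the chart hides.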

In this example, no lies were told. Just the truth, but distorted. The numbers stayed honest, but the story didn’t. The designers staged perception, stretched sentiment, and turned a modest climb into the headline act.

🕰️ It’s not just a misleading visual. It’s emotional propaganda disguised as design. It doesn’t say “Leader B is the best.” It whispers it. Through layout, spacing, and the subtle art of graphic suggestion.

So next time you glance at a chart and feel its message before reading the numbers—pause. You might just be watching a story someone wants you to believe.

A Case Study in Queensland (AU) Youth Crime

Enough theory—let’s dive into a real-world example.

In the recent past, headlines across Australian media lit up with concern over youth crime in Queensland. News segments ran frequently. Charts were shown. Politicians weighed in. The message? Youth offender rates were rising. Queensland, it seemed, was in trouble.

But let’s pause. What exactly is the Youth Offender Rate? It is the number of individuals aged 10–17 who are proceeded against by police for criminal offences, typically expressed per 100,000 persons in that age group.
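
As a formula, the rate is just a count scaled by population. A minimal sketch, assuming hypothetical counts (the function name and the numbers below are illustrative, not ABS figures):

```python
def offender_rate_per_100k(offenders: int, population: int) -> float:
    """Offenders per 100,000 persons in the same age group."""
    if population <= 0:
        raise ValueError("population must be positive")
    return offenders / population * 100_000


# Hypothetical example: 1,200 offenders in a population of 60,000 aged 10-17.
example_rate = offender_rate_per_100k(1_200, 60_000)
print(f"{example_rate:.0f} per 100,000")
```

Because it is a rate, it can move when either the offender count or the population changes, which is one more reason to read it over several years rather than two.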

Now, yes—the rate did increase between 2021–22 and 2022–23. That’s true. But is it the full story?

Not quite.

Data Source: Australian Bureau of Statistics (Table 20)

Let’s rewind to 2021–22. Australia was still under the shadow of COVID-19. Lockdowns. Curfews. Social distancing. People weren’t out and about. Crime hadn’t vanished; movement had.

So naturally, youth crime rates dipped. Fewer interactions. Fewer opportunities. Fewer offences.

Then came 2022–23. Restrictions eased. Life resumed. And with it, so did the numbers. The rise wasn’t a crisis—it was a rebound.

Zoom out beyond those two years, as shown in the line chart, and you’ll see a 15-year trend that tells a very different story. According to the official numbers, the youth offender rate has, in fact, plummeted since the late 2000s.

So yes, there was a bump. But in the grand scheme? It’s barely a ripple.
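
The same series can produce both headlines, depending on which window you pick. The sketch below uses invented numbers shaped like the story so far (a long decline, a dip during restrictions, then a rebound). They are placeholders, not the ABS data, and `pct_change` is a hypothetical helper.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Placeholder rates per 100,000 by financial year (NOT real ABS figures).
illustrative_rates = {
    "2008-09": 3000.0,
    "2015-16": 2200.0,
    "2021-22": 1600.0,  # dip during restrictions
    "2022-23": 1700.0,  # rebound
}

years = list(illustrative_rates)
last_year = pct_change(illustrative_rates[years[-2]], illustrative_rates[years[-1]])
long_run = pct_change(illustrative_rates[years[0]], illustrative_rates[years[-1]])

print(f"Latest year vs previous: {last_year:+.1f}%")
print(f"Latest year vs {years[0]}: {long_run:+.1f}%")
```

Both numbers are true for this made-up series. Only the first one makes a headline.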

And here’s the kicker: Queensland’s youth offender rate isn’t even the highest. As the same line chart shows, jurisdictions like the Northern Territory, Western Australia, and Tasmania consistently record a higher offender rate than Queensland.

So when media outlets spotlight Queensland as the epicentre of youth crime, they’re ignoring the broader landscape. It’s not just misleading—it’s emotionally manipulative.

This case study isn’t just about numbers. It’s about how data can be framed to stir emotion, drive policy, and shape public perception.

A short-term spike, taken out of context, becomes a headline. A long-term decline, quietly ignored, becomes invisible. And that’s the danger of selective storytelling.

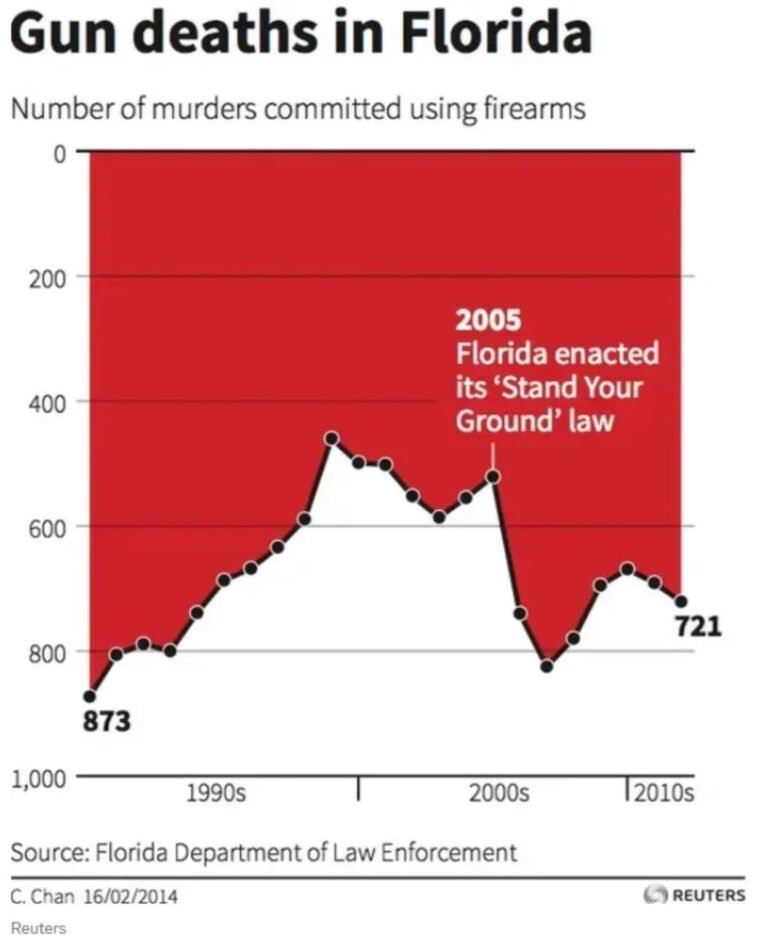

Red Flags and Reversed Realities: A Classic Illusion

No discussion of misleading visuals is complete without this classic illusion.

There it is: bold, dramatic, and unsettling.

The headline read: “Gun Deaths in Florida.” And beneath it, a river of dark crimson surged down a chart. The color choice wasn’t subtle — it screamed violence. It looked like a crime scene translated into pixels.

At first glance, panic sets in. The dark red fills the frame, and it feels like a crisis. Then your eyes follow the line from left to right, past 2005, marked as the year the “Stand Your Ground” law was enacted, and the line drops. A visual cue that seemingly says, Look what happened after that.

But here’s the trick: the Y-axis is reversed. The chart starts at 0 at the top and counts up as it moves down the page, so the lower the line dips, the more deaths occurred. That reassuring drop after 2005? Actually a rise in firearm-related murders.

Still, who would notice at a glance? Most viewers wouldn’t dissect the axis values; they’d absorb the overall shape. The design suggests a decline. The data quietly disagrees.

And that’s the danger. Not of misinformation, but of misperception. A graphic like this doesn’t lie, but it doesn’t tell the truth cleanly, either. It lets first impressions run ahead of facts.
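
Mechanically, reversing an axis is a one-line change in how values map to positions on the page. Here is a minimal sketch, assuming a hypothetical 400-pixel-tall plot and a value range of 0 to 1,000. `y_pixel` is an illustrative helper, not any charting library’s API, and the two counts are made up. Pixel 0 is the top of the plot, as in most screen coordinate systems.

```python
def y_pixel(value: float, vmin: float, vmax: float, height: int, inverted: bool = False) -> float:
    """Vertical pixel position of a value; pixel 0 is the top of the plot."""
    fraction = (value - vmin) / (vmax - vmin)  # 0.0 at vmin, 1.0 at vmax
    if inverted:
        return fraction * height       # zero at the top, larger values lower down
    return (1 - fraction) * height     # conventional: larger values higher up


# Hypothetical counts for two years (illustrative only).
earlier, later = 500, 800

normal = [y_pixel(v, 0, 1000, 400) for v in (earlier, later)]
flipped = [y_pixel(v, 0, 1000, 400, inverted=True) for v in (earlier, later)]

print("Conventional axis:", normal)
print("Inverted axis:    ", flipped)
```

On the conventional axis the larger count sits higher on the page (a smaller pixel number). On the inverted axis the same increase is drawn as a drop, which is why the axis labels deserve a look before the shape does.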

Conclusion and Food for Thought

All the above visuals weren’t just wrong. They were beautifully wrong. They had polish, colour, and creativity. They even conveyed the data… just not the truth.

The best analysts? They’re like magicians.

They know what catches your eye… and what slips past unnoticed. They can mislead without ever distorting the data. And even when all the numbers check out, the visual still might not.

So how do we train ourselves to see past the illusion?

How do we judge not just what’s shown—but how it’s shown?

These questions sparked a journey: collecting examples, sharpening my critical eye, diving into design ethics. I realized quickly—this topic isn’t just fascinating, it tests your integrity, your intuition, your storytelling, and your scepticism.

Feeling curious yet? Wondering what makes a visual good versus dangerous?

In my next post, I’ll walk through how to assess visuals for truthfulness, clarity, and impact. Till then, stay tuned — and keep your analytical wand at the ready. 🪄